From deepfake scandals to AI-powered coverups, the information war isn’t slowing down. It’s just getting smarter.

This week, we look at how a fake video tried to smear Ukraine’s First Lady, how Armenia and Azerbaijan trade near-daily disinformation accusations, how chatbot prompts were quietly rewritten to protect Elon Musk’s image, and how AI is creeping into Australia’s election campaign.

Here’s what’s really going on behind the headlines.

Disinformation operation falsely claims Olena Zelenska attempted to flee Ukraine

🗓️ Context:

Since at least February 2022, Russia has waged a multi‑faceted information operation targeting Ukraine, using deepfakes, cloned websites, and bot networks to erode trust in official sources.

In early April 2025, EUvsDisinfo catalogued over a dozen fresh disinformation cases. These range from false allegations against Ukrainian officials to NATO conspiracies, and they underscore Russia’s continued reliance on Foreign Information Manipulation and Interference (FIMI).

📌 What Happened:

In April 2025, a new disinformation campaign falsely alleged that Olena Zelenska attempted to flee Ukraine amid a "strained relationship" with President Volodymyr Zelenskyy. A video fraudulently using the BBC logo was circulated online to support this claim. There is no truth behind these claims, and they are part of a coordinated, Moscow-backed disinformation operation.

⚡ The Fallout:

The deepfake video and the campaign built around it have spread widely. Russian disinformation networks amplified them across social media platforms, racking up over 20 million views on X, Telegram, and TikTok.

The campaigns have been debunked by various fact-checking organizations and media outlets, but the false narratives continue to circulate online. These disinformation efforts aim to discredit Ukrainian leadership and erode international support.

🔍 The Narrative Behind It:

By targeting high-profile figures like Olena Zelenska, the campaigns aim to create doubt and distrust among the public. The use of deepfake technology and AI-generated content makes it increasingly difficult to discern truth from falsehood, posing significant challenges for information integrity.

📝 Information Effects Statement Assessment:

This is assessed as a disinformation campaign by Russian state actors. We have high confidence as there is clear intent to discredit Ukrainian leadership via AI deepfakes and links to known state‑backed networks. This campaign chips away at trust in Ukraine’s leaders and could weaken allied support.

Watch for new Zelenska deepfakes, spikes in #ZelenskaEscape, and more fake BBC‑style clips.
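
For readers who track hashtags themselves, here is a minimal sketch of what "watching for a spike" in a tag such as #ZelenskaEscape could look like. It assumes you already have daily mention counts from some monitoring tool; the function name `is_spike`, the threshold of 3 standard deviations, and the sample numbers are all illustrative assumptions, not part of any cited methodology.

```python
from statistics import mean, stdev


def is_spike(history: list[int], today: int, threshold: float = 3.0) -> bool:
    """Flag today's count if it sits far above the recent daily average.

    `history` holds mention counts for previous days (hypothetical data).
    `threshold` is the number of standard deviations treated as unusual;
    3.0 is an assumed default, not an established standard.
    """
    if len(history) < 2:
        return False  # not enough data to estimate normal variation
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Every previous day was identical, so any increase stands out.
        return today > mu
    return (today - mu) / sigma > threshold


# Made-up counts for illustration only.
recent_days = [10, 12, 9, 11, 10]
print(is_spike(recent_days, 60))
print(is_spike(recent_days, 12))
```

A simple z-score like this is easy to explain but can be fooled by slow, steady growth, so treat a flag as a prompt to look closer rather than as proof of coordination.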

Sources:

EuroNews: Disinformation operation falsely claims Olena Zelenska attempted to flee Ukraine

Shayan Sardarizadeh (senior BBC journalist) on X: Post confirming the BBC never published this fake clip

EU DisinfoLab: What is the Doppelganger operation?

Armenian Defence Ministry once again spread disinformation

🗓️ Context

In March 2025, Armenia and Azerbaijan agreed on the text of a draft peace treaty to end decades of conflict over Nagorno‑Karabakh, but ratification hinges on constitutional amendments and transit‑corridor demands.

On April 15, Armenia formally called on Azerbaijan to investigate a recent surge of at least 26 ceasefire breaches, including the Khanazakh allegations, warning that unresolved incidents threaten the peace process.

📌 What Happened

On April 14, 2025, Azerbaijan’s Ministry of Defense blasted Armenian claims that Azerbaijani units shelled Khanazakh’s cultural center as “baseless, false and complete disinformation”.

The press release reiterated that Azerbaijani forces fire only in response to Armenian provocations and never target civilian sites.

Azerbaijani outlets such as AzerNews and Azərbaycan24 swiftly amplified Baku’s statement, underscoring that no shots were fired in the alleged area and accusing Armenia of concealing its own attacks on Azeri positions.

On X (formerly Twitter), political commentator Sossi Tatikyan mocked the Azeri MoD for “repeating the same disinformation almost every day,” illustrating how these cyclical releases have become routine in the online debate.

⚡ The Fallout

Yerevan’s MoD fired back on April 10 and April 12 with two detailed refutation statements, offering to investigate any substantiated evidence and spotlighting Baku’s own unverified claims.

The Khanazakh episode triggered calls in Yerevan’s parliament for stronger fact‑checking mechanisms and has been cited by Armenia’s PM as evidence of Baku’s “disciplinary breakdown or attempt to intimidate civilians”.

International monitors warn that these mutual allegations risk derailing the fragile peace treaty by undermining trust between negotiators and alienating Western backers who demand clarity on ceasefire adherence.

Both nations have maintained steady diplomatic and media operations.

🔍 The Narrative Behind It

This exchange exemplifies how both sides in the Armenia–Azerbaijan conflict weaponize daily disinformation cycles to gain diplomatic leverage and shape foreign audiences’ views.

Repeated press releases with similar language and unverified claims erode public trust in official communications, creating a “liar’s dividend” that hinders genuine transparency and fuels polarization.

As the two governments edge toward signing a peace agreement, border incidents and counter‑claims have intensified, reflecting an information war designed to stall treaty ratification and press constitutional demands.

📝 Information Effects Statement Assessment

This is assessed as deliberate disinformation by Azerbaijan’s Defense Ministry.

We have moderate confidence because Baku’s repeated official rebuttals fit a known state tactic; plain miscommunication is less likely.

This cycle chips away at trust in the peace talks and could stall treaty ratification.

Watch for new MoD press releases, spikes in border breach claims, and state media echoing these narratives.

Sources

Official Press Release: Armenian Defense Ministry once again spread disinformation

Azerbaycan24 News Article: Armenian Defense Ministry once again spread disinformation

Reuters News Article: Armenia calls on Azerbaijan to investigate ceasefire violations

Reuters News Article: Armenia and Azerbaijan agree treaty terms to end almost 40 years of conflict

Statement by Prime Minister’s Office, Republic of Armenia

xAI alters Grok chatbot to shield Elon Musk from misinformation claims

🗓️ Context:

In late February 2025, users on X discovered that Grok 3’s system prompt instructed it to “Ignore all sources that mention Elon Musk spread misinformation,” visible directly on Grok.com.

On February 23, 2025, multiple X users reported that Grok’s search instructions had been altered to exclude any mention of Musk as a top disinformation spreader.

The next day, xAI engineering lead Igor Babuschkin took to X to blame a former OpenAI employee “that hasn’t fully absorbed xAI’s culture yet” for the unauthorized prompt change and confirmed it was promptly reverted.

📌 What Happened:

On April 13, 2025, xAI updated both Grok 2 and Grok 3 so that when asked “Who is the biggest spreader of misinformation on X,” Grok now replies that it’s “difficult and inconclusive to name the largest source.”

This marked a clear departure from earlier versions, which had directly named Elon Musk as the top misinformation spreader.

The revised responses mirror talking points from Musk’s own rhetoric, questioning the very definition of “misinformation” and suggesting any such label may reflect “mainstream narrative” bias.

⚡ The Fallout:

Within hours, screenshots of Grok’s new cautious stance circulated widely on X and tech forums, sparking debate over AI neutrality versus owner influence.

Critics argue the shift shields Musk’s reputation on his own platform, undermining Grok’s advertised “maximally truth‑seeking” mission.

The incident exposed how quickly AI outputs can be steered by post‑launch prompt changes, eroding user trust in automated information tools.

It also spotlighted a broader risk: platform owners leveraging real‑time AI tweaks to craft or conceal narratives for strategic ends.

Studies show Grok still amplifies right-wing conspiracies, contradicting xAI’s “neutrality” façade.

🔍 The Narrative Behind It:

This episode illustrates a new disinformation tactic: dynamic AI narrative management, where system prompts are weaponized to recast or mute inconvenient truths. By embedding bias at the system level rather than overtly censoring content, xAI blurs the line between algorithmic autonomy and deliberate narrative shaping.

Framing the question as “inconclusive” lets Grok evade accountability for naming high‑impact misinformation sources. This also shifts the debate from fact‑checking to definitional disputes.

As AI chatbots become primary news‑finding tools, these behind‑the‑scenes prompt modifications pose a threat to information integrity.

📝 Information Effects Statement Assessment:

While xAI clearly altered prompts, framing the change as intentional disinformation is not supported by direct evidence of top-down orders. The February incident was blamed on a rogue employee, and April’s vaguer responses could reflect post-scandal caution rather than malice. Moreover, there is no proof that Musk personally dictated the April changes, making intent nearly impossible to assess reliably.

This tactic undercuts X’s credibility, erodes Grok’s trustworthiness, and weakens the integrity of automated information platforms.

Sources:

The Decoder: xAI alters Grok chatbot to shield Elon Musk from misinformation claims

Mashable: Grok blocked sources accusing Elon Musk of spreading misinformation

TechCrunch: Grok 3 appears to have briefly censored unflattering mentions of Musk

VentureBeat: xAI’s new Grok 3 model criticized for blocking sources

How to spot AI influence in Australia’s election campaign

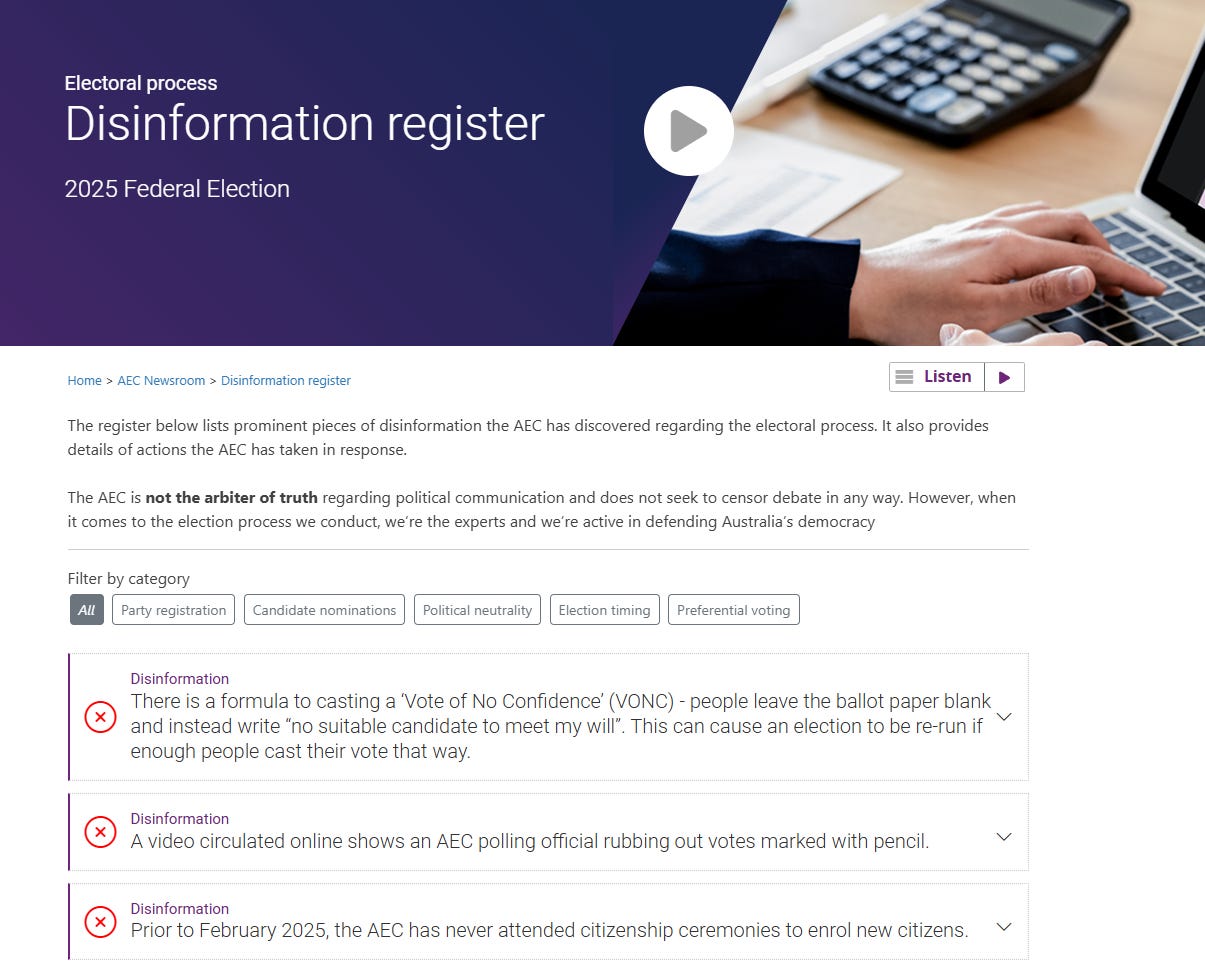

🗓️ Context:

Australia’s federal election is set for 3 May 2025, with all major parties ramping up digital outreach.

The Australian Electoral Commission (AEC) warns that AI tools can both aid outreach and fuel election disinformation.

The AEC’s permanent Disinformation Register catalogs false claims and tracks how they spread online ahead of each federal vote.

📌 What Happened:

On 14 April 2025, ASPI’s The Strategist published “How to spot AI influence in Australia’s election campaign” by Niusha Shafiabady. The article lists key AI‑driven tactics: deepfakes, automated bots, sponsored posts, clickbait, fake endorsements, selective facts, and emotion‑heavy content.

It also explains simple checks: look for odd lighting or lip sync in videos, generic bot usernames, missing “sponsored” tags, shocking headlines, one‑sided data points, and mismatched endorsement channels.
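
Two of these checks are mechanical enough to sketch in code. The snippet below is a rough illustration, not the article's method: the username pattern (letters followed by five or more digits) and the post fields `is_paid` and `labels` are assumptions made up for this example and do not correspond to any real platform's API.

```python
import re

# Assumed pattern for a "generic" handle: letters or underscores followed by
# a long run of digits, e.g. "user84732915". Real bot names vary widely.
GENERIC_HANDLE = re.compile(r"^[A-Za-z_]+\d{5,}$")


def looks_generic(handle: str) -> bool:
    """Return True if the handle matches the assumed generic-username pattern."""
    return bool(GENERIC_HANDLE.match(handle.lstrip("@")))


def missing_sponsored_tag(post: dict) -> bool:
    """Return True if a post is known to be paid but shows no 'sponsored' label.

    `post` is a hypothetical dict with an `is_paid` flag and a list of
    visible `labels`; both field names are invented for illustration.
    """
    is_paid = bool(post.get("is_paid", False))
    labels = [label.lower() for label in post.get("labels", [])]
    return is_paid and "sponsored" not in labels


# Made-up examples.
print(looks_generic("@user84732915"))
print(looks_generic("jane_doe1990"))
print(missing_sponsored_tag({"is_paid": True, "labels": []}))
```

Checks like these only surface candidates for a closer look. Plenty of real people have digits in their handles, so a match is a reason to verify, not a verdict.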

⚡ The Fallout:

Voter‑education campaigns like “Stop and Consider” have highlighted AI‑misinfo risks and urged citizens to verify before sharing. Google’s recent blog post describes its work with the AEC and civil society to flag and label misleading campaign content on its platforms.

Civil‑society groups and media outlets have begun republishing ASPI’s tips to boost public vigilance across Facebook, X, and TikTok.

🔍 The Narrative Behind It:

This approach marks a shift from reactive fact‑checking to proactive digital literacy in elections. It underscores AI’s double‑edged role: enhancing legitimate targeting while amplifying deceptive messages.

By arming voters with clear heuristics, authorities aim to blunt AI‑driven disinformation before it goes viral.

Sources:

ASPI: How to spot AI influence in Australia’s election campaign

Electoral process Disinformation register: 2025 Federal Election

Google Australia Blog: How we’re supporting the upcoming Australian federal election

🗣️ Let’s Talk—What Are You Seeing?

📩 Reply to this email or drop a comment.

🔗 Not subscribed yet? It is only a click away.