🗣️ Let’s Talk—What Are You Seeing?

📩 Reply to this email or drop a comment.


Welcome to this week’s update on the disinformation stories across our shared information and cognitive warfare domain.

This week I am trying out a new format. The aim is to make each case clearer and more actionable while highlighting its strategic implications.

This round, we’re tracking a viral video that put France’s first couple in the spotlight, a major NATO warning on digital threats, and fresh attempts from Beijing to rattle Taiwan’s sense of security.

These cases show just how fast a rumour or a doctored clip can ripple through headlines and shape what people believe.

Often before the facts even catch up.

Chinese Disinformation Seeks to Cast Doubt on Taiwan’s Defences

◾ 1. Context

China has intensified its use of disinformation and cognitive warfare to undermine Taiwan’s confidence in its own military capabilities.

The main actors are Chinese state-linked entities and nationalist influencers who exploit vulnerabilities in Taiwan’s information ecosystem. The intent is to erode public trust in Taiwan’s defence, discourage resistance, and probe how society responds to propaganda.

◾ 2. What Happened

In the past week, Chinese disinformation campaigns circulated claims that signal anomalies during tests of Taiwan’s M142 HIMARS rocket systems were caused by Chinese jamming.

Viral aerial photos on Xiaohongshu, purportedly taken by a drone over Hualien Air Base, were later debunked as composites rather than genuine drone footage. These narratives gained traction on Chinese and Taiwanese social media, prompting the Ministry of National Defence to refute them publicly.

◾ 3. Nature of Information

This is disinformation: the claims about artillery failure are intentionally false and designed to mislead by exaggerating Chinese capabilities and fabricating weaknesses in Taiwan’s defences. The intended audience is likely the Taiwanese public: cross-strait tensions are already high, which creates a cognitive vulnerability to exploit.

The sources that published the content are also not credible. Taiwan’s Ministry of National Defence debunked the jamming claims, and the drone images were shown to be fake. Official statements and independent analysis confirm the information was fabricated and spread with deceptive intent.

◾ 4. Character of Information

Dissemination occurred primarily via Chinese social media platforms like Xiaohongshu, amplified by nationalist influencers and coordinated online groups.

Tactics included doctored imagery, false attribution of technical failures, and emotional hooks portraying Taiwan’s military as ineffective. Evidence of coordinated activity and astroturfing is strong, with repeated themes and rapid amplification across platforms and bot accounts.

◾ 5. Impact & Information Effects Statement

It is assessed with high confidence that Chinese-linked actors’ dissemination of fabricated claims about Taiwan’s defence failures has undermined public trust in Taiwan’s military and government. The likely intent is to discourage resistance and shape perceptions of inevitability regarding Chinese military superiority.

The disinformation campaign fuelled anxiety about Taiwan’s military readiness, forced official denials, and contributed to public confusion. It reinforced long-running narratives of Taiwanese weakness, potentially with the intent to lower morale and trust in the government.

The campaign likely reached a significant audience within filter bubbles on both sides of the Strait, and it received coverage in Taiwanese media.

◾ 6. Strategic Implications And Lessons Learnt

Information operations and cognitive warfare have repeatedly shown their power to destabilize before any troops cross a border. In Crimea, Russian-backed disinformation and manipulation of local narratives created confusion and eroded trust in Ukrainian institutions, setting the stage for military intervention and eventual annexation.

China’s ongoing campaigns against Taiwan follow a similar logic: shaping perceptions, weakening public confidence, and laying the groundwork for any future action. This makes escalation appear more justified or even inevitable to both domestic and global observers.

Over-reliance on media literacy or debunking false claims alone risks underestimating the threat. Disinformation campaigns are rarely an end in themselves; they are often the opening move in a broader strategy that may include physical confrontation.

Sources

Taipei Times: No China interference in tests: MND

Central European Institute of Asian Studies: A threat from within: Chinese espionage in Taiwan

New Bloom Magazine: Chinese Disinformation Seeks to Cast Doubt on Taiwan’s Defenses

Shadows of Doubt: Disinformation Fueling National Security Anxiety (February–April 2025)

NATO Assembly Calls for Decisive Action on Disinformation

◾ 1. Context

NATO and Europe have faced a surge in cyberattacks and coordinated disinformation campaigns, especially from Russia and China. These campaigns have targeted elections, critical infrastructure, and public trust.

The Alliance’s vulnerabilities include fragmented information environments, algorithmic manipulation, and the rapid spread of false narratives on social media.

The intent behind these disinformation actions is to destabilize democratic societies, erode faith in institutions, and weaken NATO’s collective response. The campaigns also consistently push the message that the Russian Federation is stronger than the NATO alliance.

◾ 2. What Happened

In May 2025, the NATO Parliamentary Assembly called for stronger action against cyberattacks and disinformation, citing recent incidents such as the coordinated digital interference in Romania’s 2024 presidential election.

The campaign targeting Romania used TikTok and Telegram to amplify pro-Russian narratives, leveraging algorithmic manipulation and external actors to boost polarizing content.

◾ 3. Nature of Information

The information about the Assembly’s resolution and the threats it cites is factual, sourced from official NATO documents and public statements.

The disinformation cited in the resolution consists of coordinated, intentionally false narratives spread by Russian actors, as confirmed by NATO and independent cybersecurity organizations. These narratives have been documented by various intelligence agencies and news organizations and widely discussed in public discourse.

◾ 4. Character of Information

Disinformation campaigns referenced by NATO have used coordinated social media posts, fake news sites, and cyberattacks to spread false claims about NATO operations, Ukraine, and member governments.

Tactics include bot amplification, deepfakes, and exploiting divisive issues within societies. There is strong evidence of cross-platform, state-linked coordination and repeated targeting of vulnerable audiences.

The threat has reached the point where the armed services are now being asked to implement defensive measures.

◾ 5. Impact & Information Effects Statement

These campaigns have eroded trust in NATO institutions, influenced public debates, and complicated support for Ukraine. The Assembly’s resolution reflects growing concern over the scale and sophistication of hostile information operations.

It is assessed with high confidence that Russian-linked actors’ cyber and disinformation operations have undermined allied trust and cohesion, likely with the intent of weakening NATO’s collective resolve and support for Ukraine.

◾ 6. Strategic Implications And Lessons Learnt

Individual awareness and media literacy, while important, are not enough on their own. NATO’s recent experiences highlight the need for strong institutional frameworks. Creating dedicated threat intelligence hubs and fostering international alliances are essential steps to detect and counter coordinated disinformation.

The recent surge in cyberattacks and disinformation is about more than just spreading confusion. These tactics are part of a broader strategy to undermine collective security and lay the groundwork for possible physical confrontation. The case of Russian-linked actors targeting critical moments in Romania’s elections shows that information operations often precede or accompany other forms of aggression.

Given the cross-border nature of these threats, international cooperation is vital. Effective defence requires real-time intelligence sharing and coordinated action across cyber, diplomatic, and information domains. Only by working together can democracies hope to mount an effective response.

Modern conflict now extends far beyond physical battlefields. NATO’s increasing focus on information resilience signals a recognition that cognitive and digital warfare are as consequential as traditional military threats.

Sources

NATO Parliamentary Assembly Press Release, 27 May 2025

NATO Review: Disinformation Threats

Official Resolution: NATO PA 2025 Spring Session

Reuters: NATO Assembly Urges Action on Cyber, Disinformation

Macron Dismisses Video Showing Apparent Shove from Wife Brigitte

◾ 1. Context

French President Emmanuel Macron’s public appearances, especially with his wife Brigitte, are frequent targets for scrutiny and manipulation because of his considerable influence.

Hostile actors, including pro-Russian and anti-Macron networks, exploit such moments to undermine his image. The goal is to fuel negative narratives and distract from policy issues, leveraging public curiosity about the couple.

◾ 2. What Happened

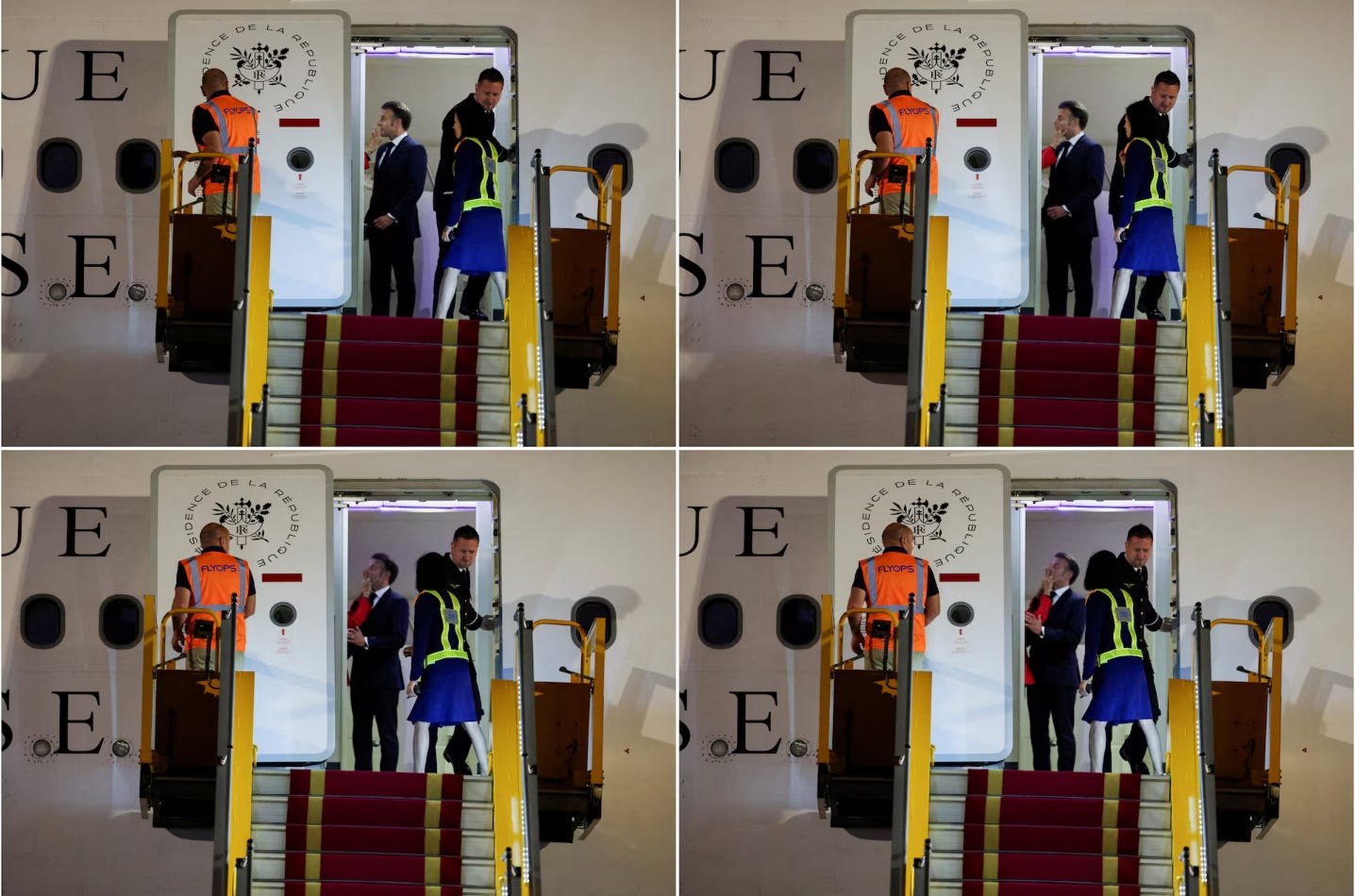

A short video showing Brigitte Macron touching President Macron’s face as they exited a plane in Vietnam went viral in late May 2025.

The authentic clip was rapidly shared across social media, with some accounts framing it as evidence of marital discord or humiliation. Coordinated sharing and misleading commentary amplified the narrative, turning a real moment into a trending controversy.

◾ 3. Nature of Information

This incident began as malinformation: a genuine video was circulated and framed to embarrass the couple and damage their reputation.

It shifted into disinformation when actors amplified false or misleading claims, intentionally distorting the context to deceive and provoke.

This assessment is strong: the video is real, but the narrative built around it is fabricated, as multiple fact-checks have confirmed.

◾ 4. Character of Information

The story spread on platforms like X (Twitter), Telegram, and TikTok, using selective editing, misleading captions, and emotional hooks.

Pro-Russian and anti-Macron accounts pushed the narrative, blending humour, mockery, and conspiracy to maximize engagement. Evidence points to coordinated amplification, opportunistic personal attacks, and narrative shaping.

◾ 5. Impact & Information Effects Statement

The viral spread fuelled speculation about Macron’s personal life, distracted from his diplomatic agenda, and eroded trust among some viewers. It provided ammunition for hostile information operations targeting French and international audiences.

It is assessed with high confidence that pro-Russian and anti-Macron actors’ conversion of a genuine video (malinformation) into a false narrative (disinformation) has undermined Macron’s public image and distracted from substantive policy issues. The likely intent is to weaken his perceived authority.

◾ 6. Strategic Implications And Lessons Learnt

Hostile actors are turning real moments into weapons by twisting authentic footage and spreading damaging narratives at speed. This goes beyond simple reputation attacks and becomes a deliberate effort to undermine leadership, distract from critical issues, and weaken public trust.

The rapid spread of these manipulated stories often outpaces traditional fact-checking and official responses.

If institutions and individuals do not adapt by detecting and responding to these threats quickly, we risk losing control of the information environment. The credibility of leaders, the strength of institutions, and the resilience of society itself are all at stake.

Recognizing this as a fundamental security challenge is essential for anyone in a position of responsibility.