Welcome to This Week In Disinformation.

This week, truth blurred as AI-generated news clones, viral TikTok “cures,” and coordinated digital deception campaigns outpaced the facts.

In Francophone Africa, fake news sites mimicking trusted French outlets spread confusion and eroded faith in real journalism.

On TikTok, mental health misinformation reached millions, offering false hope and risking real harm.

Meanwhile, in India, military leaders reported diverting significant resources to fight a flood of conflict-related disinformation.

Read on with the new format to keep you informed.

And show your support for the truth.

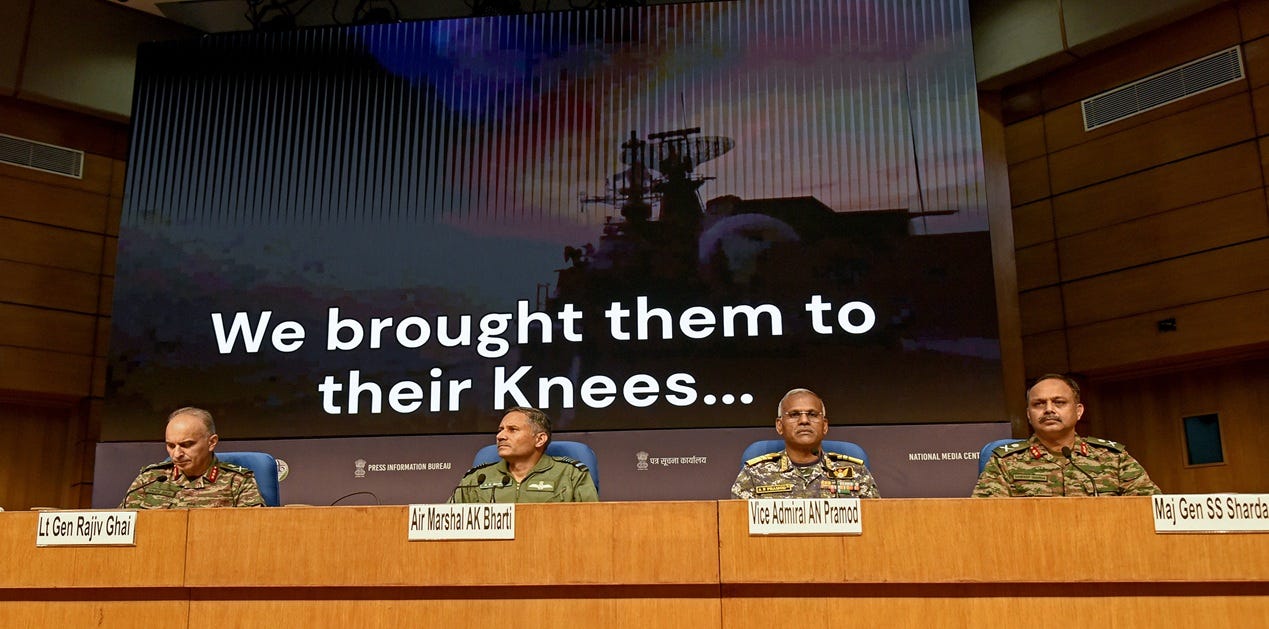

Operation Sindoor: The Operational Cost Of Countering Disinformation

◾ 1. Context

Operation Sindoor began in May 2025 after a terror attack in Pahalgam. India and Pakistan became key actors.

The intent behind the disinformation was to confuse, damage morale, and erode legitimacy.

◾ 2. What Happened

Recently, Indian military leaders said 15% of resources were diverted to fight disinformation.

These included false claims about military losses and attacks on religious sites. These stories spread quickly on social media, messaging platforms, and news channels. Often these were boosted by bots and influencers.

◾ 3. Nature of Information

Most false content was disinformation, created to mislead and manipulate during the conflict. Some misinformation was shared by accident.

The main aim was to disrupt Indian operations and sway global opinion.

◾ 4. Character of Information

False claims spread on X (Twitter), WhatsApp, and major news channels. Tactics included AI-generated content, bot networks, and influencer amplification.

◾ 5. Impact & Information Effects Statement

The campaign forced India to spend 15% of operational resources on information control, slowing decisions and harming communication. In practice, a substantial portion of personnel, intelligence, and communications resources was tied up in monitoring, debunking, and responding to disinformation.

Furthermore, commanders had to split their attention between battlefield management and information control. This diluted their focus and potentially slowed operational tempo.

Public confusion and trust issues followed, with some false stories trending and covered by global media.

◾ 6. Strategic Implications And Lessons Learnt

Operation Sindoor shows that information warfare drains resources during military operations and is now a core battlefield domain alongside land, air, sea, space, and cyber.

The mix of facts and fiction in real time challenges old defence ideas and requires new information strategies.

Sources

Stimson Center: "Four Days in May: The India-Pakistan Crisis of 2025" – Provides a detailed chronology of Operation Sindoor, the actors involved, and the escalation dynamics

Deccan Herald: "Pakistani Document Reveals India's Expanded Strike Targets in Operation Sindoor" – Details about strike targets and competing narratives over civilian and military casualties

RUSI: "Calibrated Force: Operation Sindoor and the Future of Indian Deterrence" – Analysis of India's operational objectives and information warfare context

Times of India: "Operation Sindoor: 9 aircraft lost, drones destroyed, missiles blocked" – Reports on operational outcomes, losses, and information environment claims

VIF India: "Operation Sindoor and the Battle of Perception: How Pakistan Skewed the Story" – Focuses on narrative shaping, propaganda, and the information battle during the operation

Ministry of External Affairs, India: "Transcript of Special briefing on OPERATION SINDOOR (May 08, 2025)" – Official government briefing transcript on the operation

More Than Half Of Top 100 Mental Health TikToks Contain Misinformation

◾ 1. Context

TikTok is widely used by teens and young adults for mental health advice. Key actors include content creators, influencers, and viewers seeking quick solutions.

The platform’s design favours short, viral videos, making it easy for unverified advice to spread.

◾ 2. What Happened

An analysis conducted by The Guardian reviewed the top 100 TikToks under #mentalhealthtips. Experts found that 52 of the videos contained false or misleading advice about trauma, anxiety, and depression.

Claims included “eating an orange in the shower cures anxiety” and “holy basil heals trauma in an hour.”

◾ 3. Nature of Information

Most flagged content is misinformation: false or oversimplified information shared without intent to harm. Some creators exaggerated or pathologized normal emotions, confusing viewers.

The aim appears to be engagement, not deliberate deception.

◾ 4. Character of Information

The misinformation spread on TikTok using hashtags like #mentalhealthtips. Tactics included catchy visuals, personal anecdotes, and oversimplified “hacks.”

There is no evidence of coordinated disinformation, but influencer amplification and algorithmic boosts increased reach.

◾ 5. Impact & Information Effects Statement

Teens and young adults were most affected, risking confusion and self-misdiagnosis. Harm includes trivializing real mental illness and spreading unproven remedies.

TikTok’s engagement-driven algorithms act as accelerants for misinformation, creating echo chambers where falsehoods are rapidly recycled and reinforced. This dynamic undermines traditional information gatekeeping and makes containment far harder.

More concerning, repeated exposure to catchy, simplistic “hacks” can create behavioural inertia: users may become resistant to more nuanced or evidence-based interventions, even when presented with credible alternatives.

◾ 6. Strategic Implications And Lessons Learnt

Public health must adopt an information warfare posture, treating viral misinformation as an adversarial threat requiring intelligence gathering, rapid counter-messaging, and even “pre-bunking”.

Traditional safeguards like expert review are bypassed, challenging public health efforts. Rather than relying only on official channels, public health could co-opt popular creators. The idea is to train and incentivize them to act as “trusted nodes” who can rapidly debunk and drown out harmful narratives within their own communities.

The trend matters for personal safety and public trust, as unchecked health misinformation can shape beliefs and behaviours worldwide.

Sources

The Guardian: More than half of top 100 mental health TikToks contain misinformation, study finds

New York Post: Mental health misinformation on TikTok is at an all-time high, and poses a huge risk to struggling users, experts warn

Engadget: TikTok ripe with mental health misinformation, new study reports

Weaponised Storytelling: AI Joins The Fight Against Disinformation

◾ 1. Context

Disinformation campaigns have long used storytelling to sway public opinion. The rise of AI has made narrative manipulation more complex and scalable.

Researchers at Florida International University (FIU) are developing AI tools to detect these campaigns by analysing story structures, personas, and cultural cues.

◾ 2. What Happened

FIU’s Cognition, Narrative and Culture Lab trained AI to spot coordinated disinformation by mapping narrative arcs and tracking how stories spread. The tools process large volumes of social media posts, flagging suspiciously similar or fast-moving storylines.

This approach helps analysts and crisis responders identify and counter harmful narratives in real time.
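To make the idea of flagging "suspiciously similar or fast-moving storylines" concrete, here is a minimal sketch in Python. It is not FIU's method, which the source does not describe at code level. It assumes a much simpler stand-in: comparing posts by shared three-word phrases (Jaccard similarity) and flagging near-identical posts from different accounts published within a short window. The function names, thresholds, and sample posts are all invented for illustration.

```python
from datetime import datetime, timedelta
from itertools import combinations


def shingles(text, size=3):
    """Return the set of overlapping word n-grams in a post."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)}
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a, b):
    """Share of n-grams two posts have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def flag_suspicious_pairs(posts, min_similarity=0.6, window=timedelta(minutes=10)):
    """Flag pairs of posts from different accounts that are near-identical
    and were published within a short time window of each other."""
    prepared = [(post, shingles(post["text"])) for post in posts]
    flagged = []
    for (a, sa), (b, sb) in combinations(prepared, 2):
        if a["account"] == b["account"]:
            continue  # one account repeating itself is not coordination
        if abs(a["time"] - b["time"]) > window:
            continue  # too far apart in time to count as fast-moving
        if jaccard(sa, sb) >= min_similarity:
            flagged.append((a["account"], b["account"]))
    return flagged


# Invented sample data, for illustration only.
posts = [
    {"account": "acct_a", "time": datetime(2025, 6, 1, 12, 0),
     "text": "breaking the dam has failed and officials are hiding the truth"},
    {"account": "acct_b", "time": datetime(2025, 6, 1, 12, 3),
     "text": "breaking the dam has failed and officials are hiding the truth now"},
    {"account": "acct_c", "time": datetime(2025, 6, 1, 18, 0),
     "text": "lovely weather at the lake today with the family"},
]

flagged = flag_suspicious_pairs(posts)
print(flagged)
```

Word overlap only catches copy-paste amplification. The narrative-level analysis described above (story arcs, personas, cultural cues) would need far richer models than this sketch.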

◾ 3. Nature of Information

The article is factual, reporting on research and tool development to counter disinformation.

The report describes how AI can distinguish between genuine and orchestrated narratives.

◾ 4. Character of Information

The research targets platforms like social media, where disinformation spreads rapidly. AI tools use narrative analysis, persona tracking, and timeline mapping to detect manipulation.

These tools leverage known tactics to find disinformation narratives.

◾ 5. Impact & Information Effects Statement

AI-driven narrative analysis helps intelligence and crisis-response teams respond faster to disinformation, reducing harm and confusion.

These AI tools reveal how fake stories are built and spread, making it much harder for bad actors to hide. Disinformation campaigns that used to go unnoticed are now easier to spot and stop.

As defenders use smarter AI to catch fake stories, attackers will also upgrade their tactics. The result is a constant back-and-forth: an arms race within the sphere of disinformation.

◾ 6. Strategic Implications And Lessons Learnt

AI that understands storytelling marks a shift in disinformation detection, moving beyond keyword spotting to deeper context analysis. This approach challenges old detection models and supports real-time countermeasures.

Quickly spotting and stopping harmful stories online is now just as important for national security as defending against cyberattacks or physical threats.

As AI-generated narratives grow more sophisticated, resilience depends on rapid detection, transparency, and public education. This trend matters for national security and the integrity of global information ecosystems.

Source

FIU News: Weaponized storytelling: How AI is helping researchers sniff out disinformation campaigns

Generative AI Clones French News Media in French-speaking Africa

◾ 1. Context

French-speaking Africa relies heavily on French media for news.

Unidentified actors have used generative AI to mimic trusted French outlets amid weak media literacy and fragmented regulations. The intent appears to be manipulating public opinion and weakening French influence.

◾ 2. What Happened

In late May 2025, Reporters Without Borders revealed a campaign using AI to clone French news brands and produce fake articles.

These appeared on social media and fake websites, spreading false stories targeting Cameroon and nearby countries. The campaign reached thousands and gained traction through trusted branding.

◾ 3. Nature of Information

This is disinformation, deliberately false content designed to deceive by impersonating real media.

The aim is to mislead and confuse audiences.

◾ 4. Character of Information

AI-generated fake articles, logos, and layouts were shared on social platforms and messaging apps.

Tactics included mimicking journalistic style and exploiting brand trust, with signs of coordinated amplification.

◾ 5. Impact & Information Effects Statement

The campaign sowed confusion and undermined trust in legitimate media, influencing public views on France and local politics. Furthermore, this campaign measurably weakened French soft power and influence in Francophone Africa.

Newsrooms were forced to divert resources from reporting to crisis management and debunking, weakening their ability to inform the public and respond to fast-moving events.

It is assessed with high confidence that these actors’ disinformation has distorted public debate and weakened French influence.

◾ 6. Strategic Implications And Lessons Learnt

This case shows AI’s power to scale disinformation and bypass defences. It demands stronger digital literacy, fact-checking, and cross-border cooperation. Newsrooms and platforms must improve authentication tools.

Traditional fact-checking and public warnings are now too slow and reactive. Media organizations and governments must shift to active threat hunting. Furthermore, news outlets can no longer rely on reputation alone.

No single newsroom or country can handle this alone. Francophone African states, France, and international partners should create joint task forces for real-time intelligence sharing, coordinated takedowns, and unified public messaging.

The incident signals a new phase in information warfare threatening public trust and regional stability.