🗣️ Let’s Talk—What Are You Seeing?

📩 Reply to this email or drop a comment.


Brace yourselves.

Russia’s actions are fracturing the information landscape further. This week offers a stark look at how swiftly information warfare is escalating, moving beyond familiar tactics into truly dangerous territory.

We're seeing Russia not just weaponizing AI against Western democracies, but systematically turning trusted news channels into conduits for propaganda, all while relentlessly destabilizing its neighbours.

If you're trying to make sense of this new front in the war for truth, this is your essential brief.

Welcome to this week in disinformation.

Moldova Is The Testing Ground For Russian Disinformation

◾ 1. Context

Moldova faces crucial elections, with its pro-EU path challenged by Russian interference. Russia, through proxies like Ilan Shor, seeks to destabilize Moldova and undermine its European integration.

The goal is to maintain Russian influence in the region.

◾ 2. What Happened

The Kremlin-backed "Matryoshka" network launched a disinformation blitz against Moldovan President Maia Sandu before the July 4, 2025, EU-Moldova Summit.

The network spread fabricated videos, including a fake clip claiming EU leaders would not attend and a false Euronews report about a "terrorist threat."

◾ 3. Nature of Information

The content is disinformation: intentionally false material designed to deceive. Its goal is to undermine Moldova's EU integration and discredit pro-Western leaders.

Impersonating trusted sources such as Euronews lent the content false credibility and shows clear, deliberate intent.

◾ 4. Character of Information

The "Matryoshka" network spread the disinformation primarily via social media, in coordinated phases that began with posts of fake news and images.

Tactics included impersonating news outlets and exploiting public fears, with clear signs of coordinated amplification.

◾ 5. Impact & Information Effects Statement

The disinformation aims to erode public trust in Moldovan democracy and increase euroskepticism. It targets social media users with narratives designed to exploit existing societal divisions.

It is assessed with high confidence that Russia’s dissemination of fabricated videos and false claims has sown confusion and undermined public trust in Moldova’s government and its EU integration process, likely intended to deter the country from that course ahead of the upcoming elections.

◾ 6. Strategic Implications And Lessons Learnt

Moldova's experience shows how disinformation campaigns can influence democratic processes and geopolitical alignment. Historically, this reflects Russian attempts to maintain influence without direct military action.

To build resilience, individuals need media literacy. Institutions must invest in cybersecurity and disinformation monitoring. Alliances should share intelligence on emerging tactics like deepfakes.

Sources:

Matryoshka's Moldovan manipulation

The bear behind the ballot: Moldova's election in the shadow of war

Moscow Times: Opinion Moldova Is the Testing Ground for Russia's Disinformation Machine

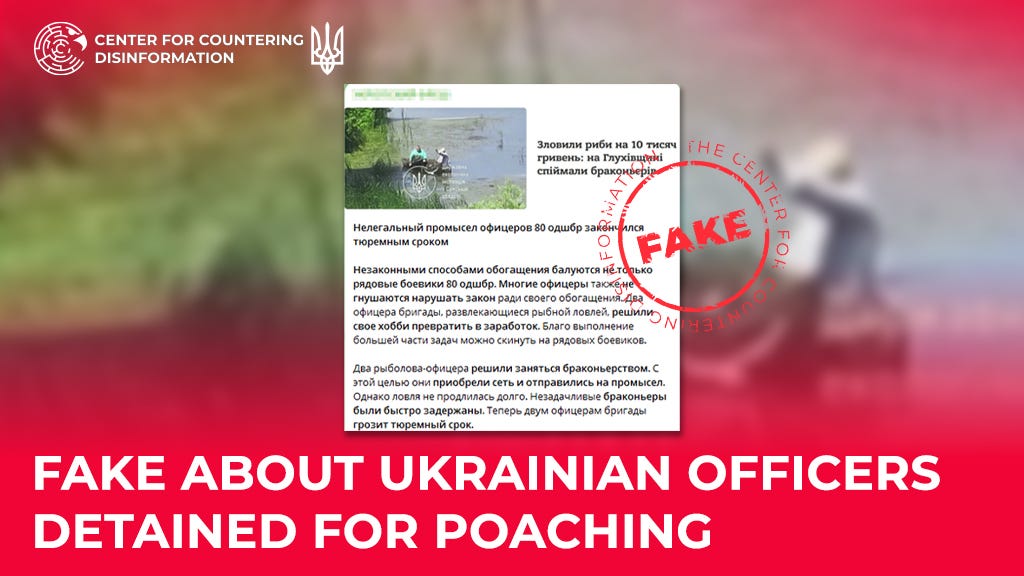

Russian Propaganda Falsely Accuses Ukrainian Officers of Poaching

◾ 1. Context

The ongoing war between Russia and Ukraine has intensified information warfare. Russian propaganda frequently aims to discredit Ukrainian servicemen and sow discord between the military and civilians.

This creates fertile ground for fabricated narratives that undermine trust in Ukraine's defence forces.

◾ 2. What Happened

Pro-Kremlin Telegram channels recently spread a false story about two Ukrainian officers from the 80th Separate Air Assault Brigade being detained for poaching in the Sumy region.

They used a screenshot of a real Ukrainian media headline to illustrate their posts.

However, the original article made no mention of military personnel.

◾ 3. Nature of Information

This information is disinformation, as it is intentionally false and designed to deceive. The intent is to smear Ukrainian soldiers and erode public trust in them.

Ukraine's Center for Countering Disinformation has confirmed that Russian propagandists fabricated the detail about military involvement.

◾ 4. Character of Information

The false claims were disseminated primarily through pro-Kremlin Telegram channels. The tactic involved taking a real news headline and adding fabricated details to create a false narrative.

This manipulates existing legitimate news to give the disinformation a veneer of truth, a common method of coordinated propaganda.

◾ 5. Impact & Information Effects Statement

This disinformation aims to create a negative image of Ukrainian servicemen, fostering distrust among the civilian population and undermining support for the military. Such narratives contribute to internal divisions within Ukraine. The campaign targets both Ukrainian and international audiences, seeking to degrade perceptions of Ukraine's armed forces.

It is assessed with high confidence that Russia’s dissemination of fabricated claims about Ukrainian officers being detained for poaching was an attempt to sow discord between Ukrainian civilians and the military, likely intended to undermine trust in Ukraine's Defence Forces.

◾ 6. Strategic Implications And Lessons Learnt

This incident illustrates how hybrid threats exploit existing media landscapes to sow internal division and weaken a nation's resolve. Historically, wartime propaganda has always sought to demoralize the enemy and fracture domestic support.

Sources:

Center for Countering Disinformation: Fake news about Ukrainian officers detained for poaching

Government of Canada: Countering disinformation with facts - Russian invasion of Ukraine

AI-Powered Russian Disinformation Targets UK

◾ 1. Context

The UK is a prime target for Russian influence operations, aimed at undermining trust in democratic institutions and weakening alliances.

The rapid advancement of artificial intelligence (AI), particularly generative AI, has provided new tools for these campaigns. Russia seeks to leverage AI as a "force multiplier" in its information warfare, automating content production and increasing reach.

◾ 2. What Happened

A recent Royal United Services Institute (RUSI) report details how Russia is actively "weaponizing AI" to target the UK with disinformation.

This involves using automated tools to generate fake articles, social media posts, images, and deepfakes.

Operations like "DoppelGänger" exemplify this by mimicking legitimate Western news outlets to erode trust and spread confusion at scale.

◾ 3. Nature of Information

The campaign is large-scale disinformation: intentionally false, AI-generated content aimed at deceiving target audiences. The intent is to manipulate public opinion, exacerbate internal divisions, and weaken the UK's social and political cohesion.

RUSI's reporting on the campaign, by contrast, is factual.

◾ 4. Character of Information

The disinformation is disseminated through various online platforms, primarily leveraging AI-enhanced bots and automated social media accounts.

Manipulation strategies include creating synthetic content (fake articles, manipulated images, deepfakes) to flood online discourse and mimic legitimate sources.

This shows evidence of coordinated activity, moving beyond human-generated content to automated, high-volume campaigns.

◾ 5. Impact & Information Effects Statement

This AI-driven disinformation aims to erode trust in democratic institutions, deepen societal divisions, and potentially influence public attitudes towards key policy issues. It complicates unified responses to Russian actions and risks trapping individuals in echo chambers. The potential reach is vast, with AI enabling the rapid and widespread dissemination of tailored false narratives.

It is assessed with high confidence that Russia’s weaponization of AI to generate and disseminate disinformation has intensified efforts to erode public trust and exacerbate divisions within the UK, likely intended to undermine democratic processes and weaken national resilience.

◾ 6. Strategic Implications And Lessons Learnt

The use of AI in disinformation has fundamentally altered information warfare, challenging traditional approaches to cybersecurity and to how audiences assess information. It pushes information manipulation beyond human scale, making detection and debunking significantly harder.