This Week In Disinformation

18 - 24 Jan 2026

From a fake Macron war speech to vanished Iranian bots and AI‑made Aussie outrage bait, this week shows how disinformation is still at play.

Welcome To This Week In Disinformation.

Macron Did Not Declare War Readiness Against Putin And Trump

◾ 1. BLUF

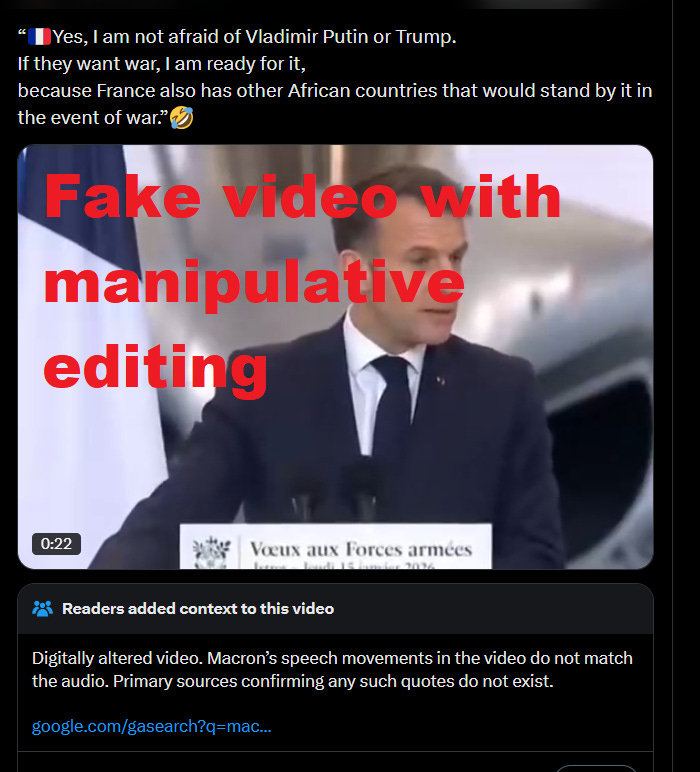

On 20 Jan 2026, the X account SilentlySirs posted a deepfake video falsely claiming that French President Emmanuel Macron had declared war readiness against Putin and Trump, backed by African allies. The post amassed 2.2 million views within three days.

This case highlights the accelerating use of AI dubbing tactics that inflame Europe-Africa tensions amid France’s setbacks in the Sahel and Trump’s 2025 return, testing platform defences and diplomatic cohesion.

◾ 2. Context

France grapples with eroding influence in Africa, where juntas in Mali, Niger, and Burkina Faso have expelled French forces since 2022. Macron’s public criticisms of this shift fuel resentment, creating fertile ground for narratives portraying France as a neo-colonial aggressor.

X’s environment amplifies this: algorithms favour outrage-driven videos, while community notes lag behind viral peaks, often arriving only after millions of impressions. Pro-Lebanon and resistance accounts, vocal on occupation and Western interference, thrive here, blending genuine grievances with unverified claims.

Against this backdrop, synthetic media slips through as ‘evidence’ of French overreach.

◾ 3. What Happened

SilentlySirs, a Lebanon-focused account with 127k followers, uploaded the video at 11:54 on 20 Jan 2026. It dubbed audio over Macron footage: ‘Yes, I am not afraid of Vladimir Putin or Trump. If they want war, I am ready for it, because France also has other African countries that would stand by it.’

The post exploded to 2.2 million views by 23 Jan, drawing replies that split roughly 40% mocking Macron (Napoleon quips, language jabs), 30% fact-checking (lip-sync flags), 20% endorsing (African tie-cuts), and 10% neutral.

X later added a community note stating that this is a ‘digitally altered video. Macron’s speech movements do not match the audio. Primary sources confirming any such quotes do not exist.’

There was no mainstream pickup; amplification remained confined to conflict-echo niches.

◾ 4. Classification

This incident qualifies as disinformation.

The core claim crumbles under scrutiny: no matching speeches exist, lip-sync fails forensic checks, and Macron’s real comments about Africa critique Russian meddling without belligerence.

Deliberate fabrication is evident in the account’s advocacy bio (‘supporting resistance against occupation’), precise targeting of France-Russia-Africa flashpoints, and persistence despite the note, indicating intent to deceive rather than mere error.

◾ 5. Tactics and Methods

The user selected the video for its visceral impact, with lips nearly in sync, triggering quick shares before doubt sets in, much like a ventriloquist fooling the eye from afar.

X is perfectly suited for this. The video achieved 2.2 million impressions and is still climbing. Basic AI dubbing tools, now free and fast, enabled solo production.

Content stirs tribal fury as it frames Macron as an arrogant warmonger, referencing Sahel humiliations and Trump bravado, exploiting confirmation bias in anti-West crowds.

No bot swarms were evident, but niche seeding (pro-Russia accounts) sustained heat. The community note added friction and curbed retweets, yet the sentiment lingers: the roughly 20% of replies endorsing the claim, concentrated in biased pockets, indicate partial success.

◾ 6. Implications

This deepfake revises a known playbook of dubbed leader clips by linking it to timely geopolitics: France’s retreat from Africa coincides with Trump’s return to office.

Unlike prior fakes (coup hoaxes, apologies), it name-drops Putin and Trump, probing NATO rifts post-2025 US shift. The tactical advantage lies in accessibility; off-the-shelf AI allows mid-tier actors like SilentlySirs to punch above their weight, flooding niches faster than in 2023.

Patterns echo the TTPs of resistance networks: grievance amplification via synthetics, mirroring Israel-Hamas misinformation waves where accounts like this seeded unverified outrage.

This clip warns of hybrid threats where grudges meet technology, demanding a vigilant fusion of tech and context.

◾ Sources

X Community Note on Macron Video | X Platform

Manipulated video misrepresented as French president | AFP Fact Check | AFP Team

Fact check: Russia’s influence on Africa | DW | Fact Check Team

@SilentlySirs Profile and Posts | X / Web Archives | N/A

Macron’s claim that Africans failed to say ‘thank you’ | CNN | N/A

Fake video claiming ‘coup in France’ goes viral | France24 | Observers Team

Engagement with fact-checked posts on Reddit | PMC / NIH | Multiple Authors

67 X accounts spread coordinated Israel-Hamas misinformation | NBC News | Brandy Zadrozny

Iranian Infrastructure Dependency Exposes State-Backed Influence Operations

◾ 1. BLUF

A House of Commons committee heard evidence on 18 Jan 2026 that 1,300 social media accounts promoting Scottish independence and anti-West narratives went silent during Iran’s internet blackout from 8 Jan 2026.

These bots show strong ties to Iranian state actors through infrastructure dependency and content patterns.

The case exposes how domestic crises can unmask foreign influence operations, offering defenders rare attribution windows and highlighting platform gaps in bot detection.

◾ 2. Context

Iran has faced internal unrest, prompting its second major internet shutdown in months. The first occurred in Jun 2025 amid clashes with Israel; the latest, from 8 Jan 2026, aimed to stifle calls for regime change. Such blackouts restrict domestic access while revealing overseas operations reliant on Iranian servers or operators.

UK politics is rife with Scottish independence discussions. Polls hover around 49% support, keeping the issue alive despite the 2024 vote setback. Platforms like X amplify divisive chatter, where algorithms favour outrage over nuance and allow foreign interference.

◾ 3. What Happened

Ciaran Martin, former NCSC chief, faced questions from Emily Thornberry’s Foreign Affairs Committee on 18 Jan 2026. He cited UK Defence Journal work on 1,300 X accounts that promoted Scottish nationalism, Brexit grievances, and BBC criticism. These accounts went dark precisely when Iran cut the internet during protests.

Cyabra, a disinformation tracker, first flagged the issue in mid-2025. Its forensics revealed clusters of fake profiles with AI-generated images, synchronised posts, and unusual VPN trails back to Iran. Before the shutdown, the accounts had amassed 224 million views; the drop in activity confirmed the link.

Emily Thornberry pressed further, ordering X, Meta, and TikTok executives to explain. The government expanded its foreign meddling investigation under the Online Safety Act. No takedowns have followed yet, but the hearing highlighted the scale.

◾ 4. Classification

This qualifies as malinformation.

The bots distorted real debates such as Scottish polls and BBC disputes into wedges, but the core facts remain intact.

Coordination is evident: 1,300 profiles in tight networks, outage-related halts, and post-resume shifts to praising Iran indicate deliberate weaponisation. Organic nationalists lack the technological polish or Iranian fingerprints. The intent was to divide Britons cheaply while Tehran faced internal turmoil.

◾ 5. Tactics and Methods

Operators chose X for its speed and rage-inducing algorithm. Posts about independence or BBC bias trigger shares before fact-checks can intervene. X reaches UK politicians quickly, unlike TikTok’s youth demographic. They layered Facebook crossposts for longevity to evade single-platform bans.

Volume drove scale. Thousands of posts in weeks, with mutual retweets, inflated reach to 224 million views, but the Iranian network dependency exposed them. VPN failures during blackouts revealed the home base. The sophistication is mid-tier, employing professional tools but with fragile operational security. Platforms lagged as X’s tools detected clusters too late.

◾ 6. Implications

This outage exposed the bot network’s core weakness in one fell swoop. Operators tied their foreign interference to Iran’s domestic net. When blackouts occurred, the entire operation froze. That single point of failure revealed the command-and-control structure. Iranian planners overlooked how their own repression tools would undermine overseas efforts.

Iran may shift to cloud proxies next if protests continue. Real-time monitoring of state-level internet outages could flag such operations early. Platforms face Ofcom fines if detection fails. Policymakers must prioritise funding behavioural analytics over keyword searches.

This may also imply that if another country with foreign interference activities on social media shuts down its entire internet infrastructure, similar vulnerabilities could be exposed.
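The outage-correlated silence described in this case can be sketched as a simple behavioural check. This is a minimal illustration under stated assumptions, not the method used by Cyabra or UK Defence Journal: the function name, the `(account_id, timestamp)` input shape, the equal-length baseline window, and the `min_baseline_posts` threshold are all hypothetical choices.

```python
from datetime import datetime, timedelta


def flag_outage_silent_accounts(posts, outage_start, outage_end, min_baseline_posts=5):
    """Return sorted account IDs that were active before an outage but silent during it.

    posts: iterable of (account_id, datetime) pairs (hypothetical input shape).
    min_baseline_posts: assumed threshold for counting an account as "active".
    """
    # Compare the outage window with an equal-length window immediately before it.
    window = outage_end - outage_start
    baseline_start = outage_start - window

    before = {}
    during = {}
    for account_id, ts in posts:
        if baseline_start <= ts < outage_start:
            before[account_id] = before.get(account_id, 0) + 1
        elif outage_start <= ts < outage_end:
            during[account_id] = during.get(account_id, 0) + 1

    # Flag accounts with a real baseline of activity and zero posts in the outage.
    return sorted(
        account
        for account, count in before.items()
        if count >= min_baseline_posts and during.get(account, 0) == 0
    )
```

Silence during an outage is only a signal, since genuine users also go quiet for ordinary reasons. In practice it would need to be combined with the other indicators mentioned above, such as synchronised posting and AI-generated profile images.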

◾ Sources

UK Defence Journal reporting on Iran bots raised in inquiry | UK Defence Journal | Lisa West

When Iran's internet went down during its war with Israel, so did bot networks spreading disinformation | Cyabra via SAJR | Deborah Danan

Uncovering Iran's Online Manipulation Network | Cyabra | Cyabra Team

UK says pro-indy Scotland X accounts went silent as Iran cut net | New Arab

Iran leans into state-run intranet amid lingering blackout | Iran International

Iran Is Cut Off From Internet as Protests Calling for Regime Change Intensify | New York Times | Farnaz Fassihi, Pranav Baskar and Sanam Mahoozi

Fabricated de Minaur Quotes Fuel Scam Traffic

◾ 1. BLUF

Vietnam-operated Facebook pages fabricated inflammatory political quotes from Australian tennis star Alex de Minaur, targeting Prime Minister Anthony Albanese and others during the 2026 Australian Open.

These operations appear profit-driven through clickbait links to scam sites, showing moderate coordination across pages but no clear state backing.

◾ 2. Context

Australia’s social media landscape remains ripe for foreign engagement bait. Tensions rose after the December 2025 Bondi Beach shooting, sparking waves of opportunistic disinformation and misinformation that blended tragedy with political divides.

Alex de Minaur, then world No. 7 and a national favourite, drew attention during the United Cup and Australian Open in January 2026. His matches against players like Hubert Hurkacz coincided with Albanese’s Labor government facing criticism from One Nation’s Pauline Hanson on issues such as immigration and cultural debates.

Vietnam-based page admins seized the opportunity: mix a clean-cut athlete with hot-button politics, add AI images, and funnel clicks to ad-heavy sites. This built on earlier network hits faking swimmer quotes and Bondi narratives, exploiting Australia’s event-driven news cycles where sport and politics often collide.

◾ 3. What Happened

The pages began posting about de Minaur around 15 January 2026, right as he competed in Melbourne.

One claimed he blasted Albanese for match-fixing after a United Cup match that he had actually won but that the post wrongly called a loss. In the fabricated exchange, Albanese retaliated by labelling him a ‘mediocre player who blames others’.

Another had de Minaur threatening to quit over ‘political interference’, claiming the tournament was ‘bought off’.

A third attributed to him a boycott of ‘LGBT and Labor’, with Penny Wong responding on TV. Pauline Hanson supposedly defended him in a late-night rant against Albanese’s ‘despicable tricks’. His mother Esther appeared in an AI-generated image, condemning critics for their cruelty towards her ‘26-year-old boy’.

These posts surfaced on English-language Facebook pages run from Vietnam, using vague teasers like ‘shocking statement’ and hiding scam links in comments.

AAP FactCheck identified the pattern by 15 January, tracing admins and debunking each claim. Follow-up investigations confirmed the findings: no records of the statements existed, the quotes misrepresented facts, and the images were clearly AI-generated. The network had shifted from Bondi fakes and swimmer narratives, posting dozens across various themes. The scale remained unclear: AAP referred to it as ‘a slew’, with prior phases exceeding 30 political items, but Meta shared no view or share counts.

◾ 4. Classification

This incident counts as disinformation. The quotes about referees, boycotts, and party attacks are false: no public record supports them, the match details are wrong, and the visuals are AI-generated.

Tactics confirm intent: templated outrage hooks, coordination across sports-politics pages, and scam-link funnels indicate deliberate deception for profit.

◾ 5. Tactics and Methods

Operators chose Facebook deliberately. It offers vast Australian audiences, with algorithms that favour rage-bait and lag on image checks. They timed posts for evening peaks during de Minaur’s Open run.

Each post dangled betrayal hooks: a PM rigging referees, a mother pleading in an AI-generated face that appeared fake upon closer inspection, and boycotts stirring culture war tensions.

Interestingly, the scam links lurked in comments, evading main-post flags and funnelling clicks to ad-stuffed sites.
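The comment-hiding tactic suggests a simple check: look for domains that appear in a page's own comments but not in the post itself. The sketch below is illustrative only. The function name, the `(author, text)` comment shape, and the assumption that the page account posts the links itself are all hypothetical and are not drawn from AAP's reporting.

```python
import re
from urllib.parse import urlparse

# Loose URL matcher; adequate for a sketch, not a full URL grammar.
URL_PATTERN = re.compile(r"https?://[^\s<>\"']+")


def links_hidden_in_comments(post_text, comments, page_author):
    """Return sorted domains linked in the page's own comments but absent from the post.

    comments: iterable of (author, text) pairs (hypothetical input shape).
    page_author: name of the account that published the post (assumed to seed the links).
    """

    def domains(text):
        found = set()
        for url in URL_PATTERN.findall(text):
            # Drop trailing punctuation that the loose pattern may capture.
            netloc = urlparse(url.rstrip(".,)!?")).netloc.lower()
            if netloc:
                found.add(netloc)
        return found

    post_domains = domains(post_text)
    hidden = set()
    for author, text in comments:
        if author == page_author:
            hidden |= domains(text) - post_domains
    return sorted(hidden)
```

A post with a vague teaser and no link, followed by a self-comment carrying an outbound domain, would be surfaced by this check for review. It does not judge whether the destination is a scam site.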

◾ 6. Implications

What stands out is the sport-politics mash-up with AI family images. This is a twist on clickbait, pulling apolitical stars like de Minaur into Albanese-Hanson conflicts. Previous operations targeted politicians directly; this launders outrage through celebrities, making it harder to dismiss as partisan. Real-time adjustments during the Open indicate that operators now track live scores, not just elections.

It slots into foreign profit mills from Vietnam and elsewhere, pursuing revenue in English markets.